2025 and Beyond Cybersecurity Trends You Should Know

It goes without saying that in cybersecurity, things change very fast! With threats evolving, so does the need for countermeasures to deter these threats. Attackers are utilizing AI now more than ever to automate their attacks. In this post, we'll go over some of those attacks already familiar to us! At the end of this post, we'll have a much better picture of how the trends for this year in cybersecurity affect the cyber landscape! Information is power, the more you know, the more you can do. The more you know about what hackers are doing, the better job you can do in building defenses.

Shadow AI

Shadow AI as described by IBM is the "unsanctioned use of any artificial intelligence (AI) tool or application by employees or end users without the formal approval or oversight of the information technology (IT) department." Employees often turn to these tools to enhance productivity and expedite processes. This stuff is so good and everyone is going to want to do it and everyone is going to do it, that not all of these AI deployments will be authorized, or be the ones that are approved by the organization. Some examples of shadow AI is someone goes into a cloud instance and pulls down a model and starts running away. Another common example is generative AI (gen AI) unauthorized use of applications such as OpenAI's ChatGPT. Since IT teams are unaware of these apps being used, employees can unknowingly expose the organization to significant risks concerning data security, compliance, and the company's reputation. AI being built into mobile phone operating systems raises concerns dealing with data leakage, and misinformation. Such an example of this is Apple Intelligence into smartphones. A malicious actor could conduct an AI model manipulation which is an adversarial attack on AI models which could lead to systemic vulnerabilities. For example, they can manipulate input data where the bad actor could potentially deceive AI systems into creating "forced hallucinations" that turn benign notifications into malicious AI summaries. Data leakage could occur as well since AI systems require vast amounts of data to function effectively. This vast amount of sensitive information can create an attractive target for a bad actor, possibly leading to big data breaches. According to Infosecurity Magazine from 2023 to 2024, "the adoption of generative AI applications by enterprise employees grew from 74% to 96% as organizations embraced AI technologies." Furthermore, today over one-third (38%) of employees "acknowledge sharing sensitive work information with AI tools without their employers' permission." - Infosecurity Magazine. With the complex nature of AI systems, there is an expanded attack surface for not just smartphones but everything else where AI has a footprint. Each component of the AI pipeline from data collection to model execution presents potential entry points for cyber threats.

Deepfakes

You probably may have heard of this term a lot over the past year. Deepfake attacks have dominated news headlines. Attackers are leveraging deepfakes as part of their frequent phishing and social engineering campaigns. But what exactly is a deepfake and how is it being used in phishing and social engineering campaigns? A deepfake is a "piece of synthetic media - an image, video, audio, or text that appears authentic, but has been made manipulated with generative AI models." Very common examples include voice cloning, involving fake speech created with less than a minute of voice samples of real people. This poses a concern where biometric verification is used in industries to access a customer account or service. This was also recently seen in the 2024 democratic primary in New Hampshire, there was a deepfake robocall of Joe Biden's voice calling people telling them they did not need to vote in the primary, they could just save their vote for the general election. Another example are deepfake videos. We saw an attack of this last year where a deepfake was able to emulate and impersonate the CFO of Arup a UK engineering firm. An employee was convinced in wiring $25 million dollars out of the company into the attackers account. This was all done using a deepfake in a video call where the employee thought for sure they were talking to the CFO and therefore following the instructions, when in fact it was a deepfake AI generated impersonation of the actual person where $25 million dollars were lost.

Exploits and Writing Malware

We already know that generative AI is able to write code. Just as it's able to write code, it's also able to write malware. There was a study by IBM that found that ChatGPT 4 was correctly able to, when given an adequate description of one day vulnerabilities, exploit them 87% of the time. That means a bad actor does not even have to know how to write code. They just need to know how to take the information about the description of the problem, put it into the write chatbot, and now they get their exploit and can launch it. We've seen this already in practice. Amazon's CISO CJ Moses in an interview with the Wall Street Journal was asked how many attacks he sees regularly now. CJ stated that on average "750 million attempts are made per day." Previously that number was about "100 million hits per day, and that number has grown to 750 million over six or seven months." CJ was asked if this is a sign that hackers are using AI, which he acknowledged, "Without a doubt. Generative AI has provided access to those who previously didn’t have software-development engineers to do these things. Now, it’s more ubiquitous, such that normal humans can do things they couldn’t do before because they just ask the computer to do that for them."

Prompt Injection

Generative AI is subject to some of the same failings that humans have. It believes a lot of things, it can be naïve, and it can be socially engineered. Prompt Injection is telling a prompt to do things that the originators of the technology did not intend it to do. This way a bad actor can continue to find ways to get the prompt to go beyond what it's intended to do and break its guardrails. According to OWASP (Open Web Application Security Project) this is the number one attack type against LLMs (Large Language Models) which generative AI is based on.

Utilizing AI to Improve Cybersecurity

How can we do a better job in cyber now that we have this AI tool? It's not just an attack surface, or just a negative, let's leverage this tool as well! Some ways that have been talked about is using generative AI in a more passive role, where it's doing analysis and such. We can use gen AI to find out what are the key findings of the reports from security advisories to see if we are affected by any vulnerabilities. For example we can figure out what are the IOC (Indicators of Compromise) associated with these advisories? This is something that gen AI is great at doing and that is summarization. We don't need to spend so much time reading security advisories. We should change our focus in doing less investigation and analyzing code, and instead focus on doing more strategy and architecture. Using AI as a force multiplier is the definitive approach here.

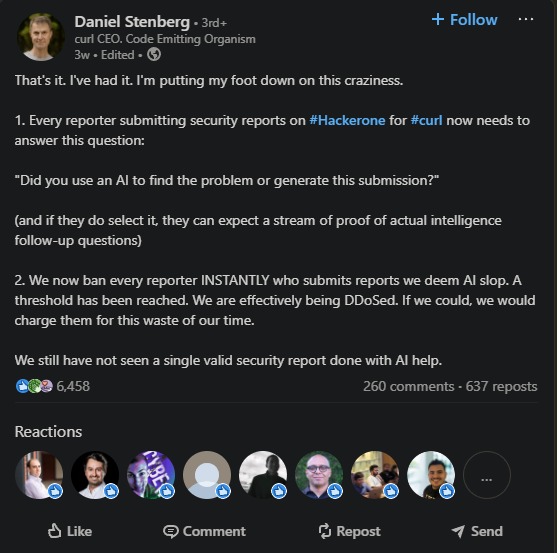

Quantum Safe Crytography & Denial-of-Attention from AI Slop

Ok, I know that covering two trends in one section is overkill, but trust me I will make it quick! Let's start with quantum safe cryptography. Quantum Computers will be able to break our cryptography in the near future. Someone will be able to read all our encrypted messages that we have put out by using a quantum computer. Quantum computers are essentially computers that are able to "complete massively complicated problems at orders of magnitude faster than modern machines. For a quantum computer, challenges that might take a classical computer thousands of years to complete might be reduced to a matter of minutes." We're going to need to start moving towards quantum safe or post quantum crypto algorithms, the ones that will not be susceptible to attack and vulnerable to quantum attacks. We need to act now because there are already people doing what's called harvest now decrypt later. Someone makes a copy of your data right now, then waits for a quantum computer to get strong enough, and then that person can now read what your stuff was. Lastly another trend that recently caught my attention on LinkedIn is what's called denial-of-attention from AI slop. This is when AI is used to find a real bug which then AI is used to generate fake bug reports to prevent them from finding the real bug.

This is essentially a denial of service of the security community where we completely exhaust the community, creating two scenarios where there is not just enough people to review all the bug reports and fix all the bugs reported, or where there are people who are reporting legitimate bugs and illegitimate bugs and legitimate bugs could slip through the cracks because of AI slop. A user even asked a great question on how many of these bogus bug reports the team over at curl are seeing a month, to which Daniel replied that the "rate seems to be increasing".

Information Is Power

I hope this post helped you gain a better understanding of where we stand currently with the cyber landscape, and what are the projections for beyond 2025. Let me know what your projections are. Do you think that there are some missing or that could better be explained? Let's all help each other out, I look forward to knowing more of what you think!